Core Architecture

As of Aug 10, 2025

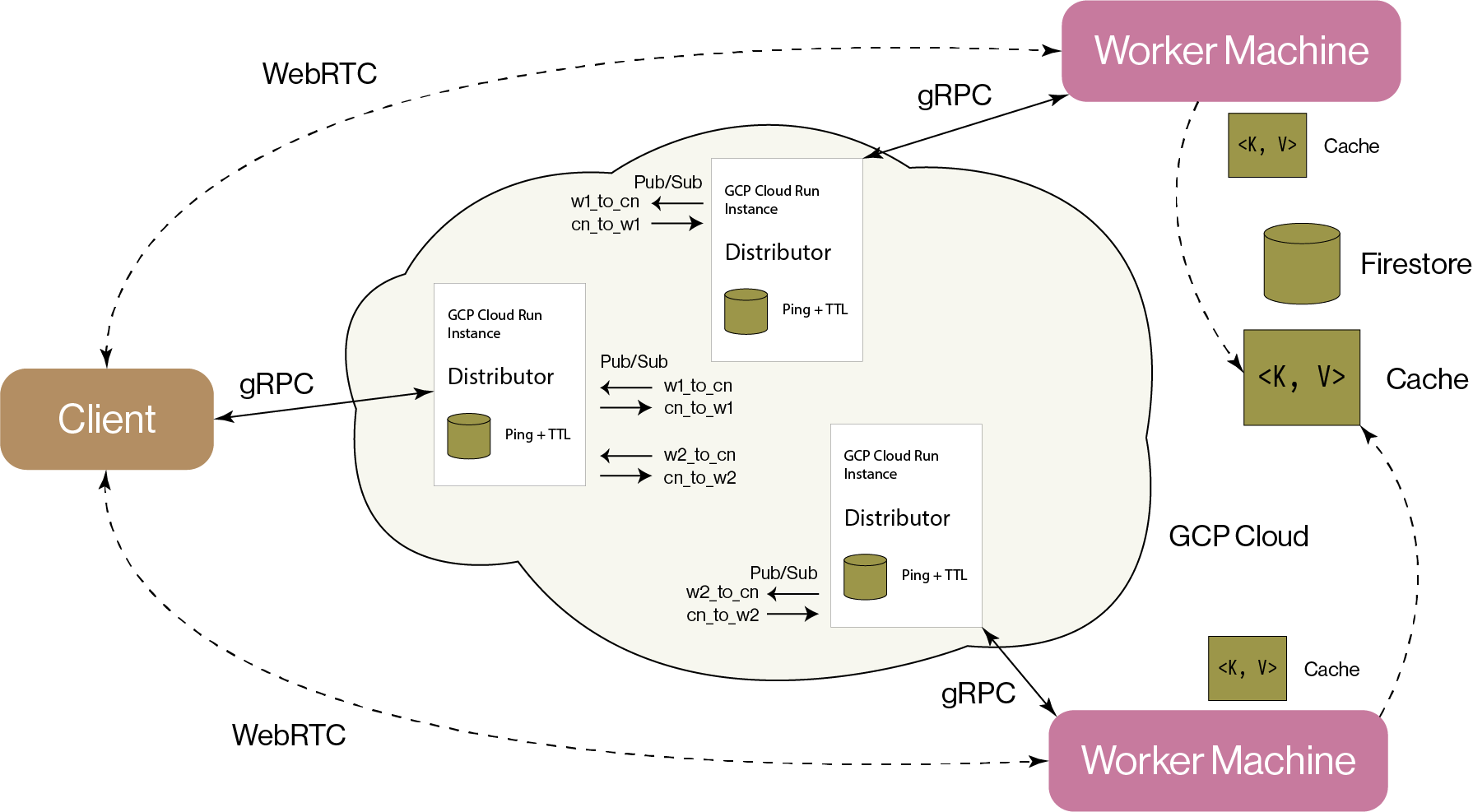

There are three main parts to the Caravan architecture: the distributor, the client, and the worker. The distributor helps establish peer connections between the client and worker(s). Once peer connections begin, the client can directly communicate with the worker for all future remote calls.

A Caravan Interaction

Here's how a typical Caravan interaction functions. Details on where implementations live in the codebase are omitted in this overview.

Worker Registration

The distributor is built as a gRPC web server using the tonic crate. Workers may register themselves with the distributor through the CLI. A worker may join the public group (available to all users) or a private group that only select clients may access via a sharing key. When a worker wishes to become available, it runs `caravan start`, which establishes a bidirectional streaming gRPC connection with the distributor. Clients may now request worker machines from the distributor.
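As a rough illustration of the bookkeeping this implies, here is a minimal in-memory sketch in Python. It is not the distributor's implementation (which is a Rust gRPC service); the names `Registry`, `Worker`, `Group`, and `PUBLIC_GROUP` are hypothetical, and the "online" flag stands in for a worker holding its streaming connection open.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Dict, List, Optional

PUBLIC_GROUP = "public"  # hypothetical name for the group open to all users


@dataclass
class Worker:
    worker_id: str
    gpu_count: int
    online: bool = False  # stands in for an open stream to the distributor


@dataclass
class Group:
    name: str
    sharing_key: Optional[str] = None  # None means no key is required
    workers: Dict[str, Worker] = field(default_factory=dict)


class Registry:
    """Toy stand-in for the distributor's view of groups and workers."""

    def __init__(self) -> None:
        self.groups: Dict[str, Group] = {PUBLIC_GROUP: Group(PUBLIC_GROUP)}

    def create_group(self, name: str, sharing_key: str) -> None:
        self.groups[name] = Group(name, sharing_key)

    def register(self, group: str, worker: Worker) -> None:
        # Registration only records the worker; it is not yet available.
        self.groups[group].workers[worker.worker_id] = worker

    def set_online(self, group: str, worker_id: str, online: bool = True) -> None:
        # Models the worker opening (or dropping) its streaming connection.
        self.groups[group].workers[worker_id].online = online

    def available(self, group: str, key: Optional[str] = None,
                  gpu_count: int = 1) -> List[str]:
        g = self.groups[group]
        if g.sharing_key is not None and key != g.sharing_key:
            raise PermissionError(f"invalid sharing key for group {group!r}")
        return sorted(
            w.worker_id
            for w in g.workers.values()
            if w.online and w.gpu_count >= gpu_count
        )
```

The split between `register` and `set_online` mirrors the text: a worker registers once through the CLI, but only counts as available while its connection to the distributor is open.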

Client Connection Requests

Using the sharing key for a private group, a client may ask the distributor for peer connections to the worker machines it can access. (I'm actually not a huge fan of the way the builder pattern looks in Python compared to Rust, so these will just become named parameters in the future.)

from caravan import Caravan

caravan = Caravan()

caravan.group("speech").email("prabhune@berkeley.edu").key("BYxUTe7n").gpu_count(1).build()

This request will attempt to make two calls:

- Requesting available worker machine IDs satisfying the query.

- Negotiating peer connections with each such worker machine using its ID.
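The two calls can be sketched as a small function. This is only an illustration of the control flow, not Caravan's client code: `WorkerQuery`, `connect`, `find_workers`, and `negotiate` are hypothetical names, and the two callables stand in for the real distributor request and the WebRTC negotiation.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class WorkerQuery:
    """Hypothetical container for the fields set by the builder calls."""
    group: str
    email: str
    key: str
    gpu_count: int = 1


def connect(
    query: WorkerQuery,
    find_workers: Callable[[WorkerQuery], List[str]],
    negotiate: Callable[[str], object],
) -> Dict[str, object]:
    # Call 1: ask the distributor which worker IDs satisfy the query.
    worker_ids = find_workers(query)
    # Call 2: negotiate one peer connection per returned worker ID.
    return {worker_id: negotiate(worker_id) for worker_id in worker_ids}
```

Passing the two steps in as callables keeps the sketch runnable without a distributor, for example with stub functions in a test.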

Peer Connection Negotiation

Peer connections are negotiated using the WebRTC protocol. Relevant links (can review after):

- WebRTC for the Curious: Protocol information in a friendly format, easy to read with a breakdown of the other bundled protocols.

- WebRTC IETF RFC 8825: Published RFC for an overview of the WebRTC protocol.

- WebRTC Data Channels IETF RFC 8831: Addendum RFC for WebRTC data channels. A relevant section is 6.6: Transferring User Data on a Data Channel.

- WebRTC Mozilla Docs: Simple docs for the negotiation pattern.

- WebRTC Javascript Examples: Super helpful use case implementations from the original Google implementors. Specifically see Generate and transfer data and the corresponding Rust example (note the `--release` when building the Rust code).

- WebRTC Rust Crate: the Rust implementation of WebRTC that is used in Caravan.

In the WebRTC implementation, the initial negotiation process requires a "signaling server" that relays client messages to the worker and vice versa. In our case, we use the distributor for this purpose. Naively, this looks like the following:

However, when the distributor is hosted in the cloud, autoscaling will spin up distributor instances that are isolated from each other out of the box, so a client and a worker may end up connected to different instances. We can modify our architecture to pass messages using Pub/Sub between distributor instances and correctly relay messages:
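To make the Pub/Sub relay concrete, here is a minimal in-process sketch. It assumes one topic per peer (the `signal/<peer_id>` naming is invented for illustration) and uses a toy `PubSub` class in place of a real managed Pub/Sub service; `DistributorInstance` is likewise a hypothetical stand-in.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class PubSub:
    """Toy in-process bus standing in for a managed Pub/Sub service."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: dict) -> None:
        for callback in list(self._subscribers[topic]):
            callback(message)


class DistributorInstance:
    """One autoscaled instance; it only knows the peers attached to it."""

    def __init__(self, name: str, bus: PubSub) -> None:
        self.name = name
        self.bus = bus
        self.local_peers: Dict[str, List[dict]] = {}

    def attach(self, peer_id: str) -> List[dict]:
        # The instance holding the peer's connection subscribes on its behalf.
        inbox: List[dict] = []
        self.local_peers[peer_id] = inbox
        self.bus.subscribe(f"signal/{peer_id}", inbox.append)
        return inbox

    def relay(self, to_peer: str, message: dict) -> None:
        # Publish instead of looking the peer up locally, so delivery works
        # no matter which instance the recipient is attached to.
        self.bus.publish(f"signal/{to_peer}", message)
```

With this shape, an instance never needs to know where the recipient lives: it publishes to the recipient's topic, and whichever instance holds that peer's connection delivers the message.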

From here, we use the standard peer connection negotiation process. Clients are designated impolite peers (always starting the negotiation), and workers are polite peers (always receiving offers). For additional details, please see the WebRTC Mozilla Docs.

- The client generates an offer. This offer consists of a local session description in Session Description Protocol (SDP) format, which lists all the data formats (e.g. Opus audio, VP8 video) that this peer is willing to exchange. The client then sends this offer to the worker through the signaling server.

- The worker receives the offer and generates an answer SDP that may exclude some of the data formats that the client offered. Both peers are now aware of the mutually compatible data types.

- The client receives the answer. At this point, both the worker and the client begin sending each other ICE candidates, which represent possible addresses that each peer is available on. We omit some details here, but this process is automated and packaged into the STUN/TURN/ICE protocols.

- As ICE candidates are received on each side, ICE connectivity-check (ping) packets are sent to establish data channel connectivity. Once the connection is established, the data channel can be treated as a simple socket (with some caveats) and we may begin sending data.

- During normal business logic, peers continue sending ICE candidates, and if a better candidate pair is found, the data channel connection is replaced under the hood for the remainder of the session.
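Two parts of the steps above can be sketched in a few lines: the answer as the subset of offered formats the worker supports, and the replacement of the selected candidate pair when a better one passes its connectivity check. This is a simplified model, not what the WebRTC crate does internally. `Candidate`, `SelectedPair`, and `make_answer` are hypothetical names, the pair priority formula is the one given in RFC 8445, and the sketch assumes the local (client) side is the controlling agent.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterable, List, Optional, Set, Tuple


def make_answer(offered: Iterable[str], supported: Set[str]) -> List[str]:
    """Keep only the offered formats the answering peer also supports."""
    return [fmt for fmt in offered if fmt in supported]


@dataclass(frozen=True)
class Candidate:
    address: str
    priority: int


def pair_priority(controlling: int, controlled: int) -> int:
    # RFC 8445 pair priority: 2^32 * min(G, D) + 2 * max(G, D) + (1 if G > D else 0)
    g, d = controlling, controlled
    return (1 << 32) * min(g, d) + 2 * max(g, d) + (1 if g > d else 0)


class SelectedPair:
    """Tracks the best candidate pair whose connectivity check succeeded."""

    def __init__(self) -> None:
        self.pair: Optional[Tuple[Candidate, Candidate]] = None
        self.priority = -1

    def on_check_succeeded(self, local: Candidate, remote: Candidate) -> bool:
        # Assumption: the local peer is the controlling agent.
        priority = pair_priority(local.priority, remote.priority)
        if priority > self.priority:
            self.pair, self.priority = (local, remote), priority
            return True  # the connection switches to this pair
        return False
```

A relayed (TURN) candidate usually carries a lower priority than a direct host candidate, so in this model a session that starts over a relay moves to the direct path once that path's check succeeds.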

The negotiation process is implemented for the client and the worker using typestates (see Will Crichton's Type-Driven API Design). Here is an example state machine diagram for the client process:
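For readers unfamiliar with typestates, here is a loose Python approximation of the idea. Caravan's implementation is in Rust, where the compiler enforces the transitions; in Python, the closest equivalent is one class per state, each exposing only the transitions that are valid from it. The state names below (`Idle`, `OfferSent`, `Negotiated`, `Connected`) are invented for illustration and are not taken from the codebase.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Idle:
    def create_offer(self, sdp: str) -> OfferSent:
        return OfferSent(offer=sdp)


@dataclass(frozen=True)
class OfferSent:
    offer: str

    def receive_answer(self, sdp: str) -> Negotiated:
        return Negotiated(offer=self.offer, answer=sdp)


@dataclass(frozen=True)
class Negotiated:
    offer: str
    answer: str
    candidates: Tuple[str, ...] = ()

    def add_remote_candidate(self, candidate: str) -> Negotiated:
        return Negotiated(self.offer, self.answer, self.candidates + (candidate,))

    def channel_open(self) -> Connected:
        if not self.candidates:
            raise ValueError("no remote ICE candidates received yet")
        return Connected(remote=self.candidates[-1])


@dataclass(frozen=True)
class Connected:
    remote: str

    def send(self, payload: bytes) -> int:
        # Placeholder for writing to the data channel; returns bytes "sent".
        return len(payload)
```

Because `send` exists only on `Connected`, code that tries to send data before negotiation finishes fails immediately (and a static type checker can flag it before the program runs), which is the property the typestate pattern is after.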

PyTorch Bijection

Once a client is connected to one or more GPUs, we now need to biject (remap) each PyTorch function to an equivalent function that enables remote calls through the established peer connections. Given that this is a more involved process than the well-documented high-level WebRTC negotiation, we omit the full documentation here for brevity. In short, each PyTorch function turns into a new function that will inspect the parameters and the return type to determine whether a remote call should be made.

From here, users may use their PyTorch program as normal.
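The remapping idea can be sketched without PyTorch itself. The example below is an assumption-laden toy, not Caravan's implementation: `RemoteTensor`, `remap`, and `remote_call` are hypothetical names, the wrapper only inspects the parameters (return-type inspection is left out), and any object with function attributes stands in for the `torch` namespace.

```python
import functools
from typing import Any, Callable


class RemoteTensor:
    """Hypothetical handle to a tensor that lives on a worker."""

    def __init__(self, worker_id: str, handle: int) -> None:
        self.worker_id = worker_id
        self.handle = handle


def contains_remote(value: Any) -> bool:
    # Recursively look for remote handles inside common containers.
    if isinstance(value, RemoteTensor):
        return True
    if isinstance(value, (list, tuple)):
        return any(contains_remote(v) for v in value)
    if isinstance(value, dict):
        return any(contains_remote(v) for v in value.values())
    return False


def _wrap(name: str, fn: Callable, remote_call: Callable) -> Callable:
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        if contains_remote(args) or contains_remote(kwargs):
            # At least one argument lives on a worker: forward the call.
            return remote_call(name, args, kwargs)
        return fn(*args, **kwargs)  # purely local arguments: run locally

    return wrapper


def remap(namespace: Any, remote_call: Callable) -> None:
    """Replace each public callable on `namespace` with a dispatching wrapper."""
    for name, fn in list(vars(namespace).items()):
        if callable(fn) and not name.startswith("_"):
            setattr(namespace, name, _wrap(name, fn, remote_call))
```

Since the wrappers keep the original names and signatures, calling code does not change: a call with only local arguments behaves as before, and a call that touches a `RemoteTensor` is forwarded through `remote_call`.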